Server equipment

ComBox Technology is a manufacturer and distributor of server hardware for execution (inference) of neural networks in data centers.

4xVCA2 server

Server for the execution of neural networks from IP cameras based on 4x Intel VCA2 for the Enterprise segment.

Server for the execution of neural networks and transcoding of video streams for the enterprise segment. Up to 180 Full HD streams, 15 FPS in the recognition of license plates, brands and models of vehicles at speeds up to 250 km / h.

Specification:

- Platform Supermicro 1029GQ-TRT with power supply 2 kW.

- Intel VCA 2 (VCA1585LMV), 4 pcs.

- Memory modules in Intel VCA2, Kingston DDR4 SO-DIMM 8Gb, 24 pcs.

- Memory modules in platform, Kingston ECC REG 16Gb, 8 pcs.

- SSD M.2 Intel 660p Series, 1Tb, 1 pc.

- CPU Intel Xeon Silver 4414 Skylake 2,2GHz, LGA3647, L3 14080Kb, 2 pcs.

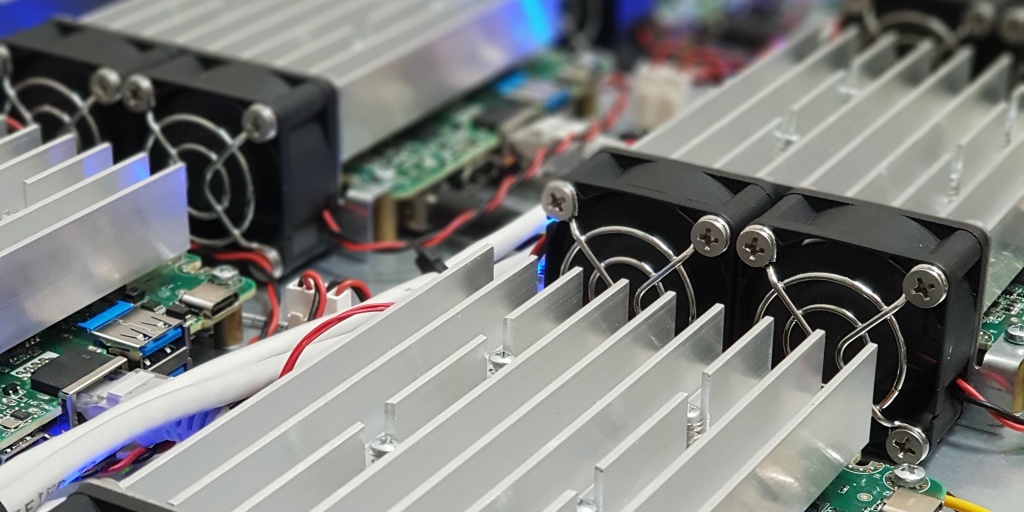

8xNUC server, rev.1

Server for the execution of neural networks from IP video cameras based on 8x Intel NUC for the corporate segment.

Server for execution of neural networks. Corporate segment. Up to 80 Full HD streams, 15 FPS in recognition of license plates, brands and models of vehicles at speeds up to 250 km / h.

Specification:

- Intel NUC8i5BEK (without case with modified cooling system), 8 pcs.

- Memory modules DDR4 SO-DIMM AMD Radeon R7 Performance, 8 Gb, 8 pcs.

- Additional memory modules DDR4 SO-DIMM Kingston, 4 Gb, 8 pcs.

- SSD M.2 storage device WD Green, 240Gb, 8 pcs.

- 1U body (own production), 1 pc.

- Router MikroTik RB4011iGS+RM (without case with modified cooling system), 1 pc.

- Connecting wires (patch cords), 11 pcs.

- Power supply IBM 94Y8187, 550W., 1 pc.

The server is a 1U solution with N pcs. SBCs with inference accelerators located inside, which are interconnected at the network level. The assembled prototype uses 8 pcs. Intel NUC8i5BEK and Ethernet 1000 BASE-T. The GPU acts as an inference accelerator and can be used in conjunction with a general-purpose processor to optimize useful performance.

The main purpose is the combined inference of neural networks on the GPU and CPU. Inference is a continuous execution of neural networks on the final hardware and software device.

Nowadays specialized FPGAs, GPUs and general-purpose CPUs are used for inference. Current solutions for CPUs are server motherboards with 1 or 2 slots for installing processors, for example, the Intel Xeon family. In the case of using a CPU, the inference is executed on logical processor cores, the number of which is equal to the number of physical cores or, in the presence of Hyper-threading, doubled. Modern multi-core processors are very expensive, and there are no industrial solutions based on 1 motherboard with 3 or more slots. Inference on the GPU is significantly more efficient than inference on the CPU, and the combined use of CPU and GPU allows you to increase performance by 3-5 times. As part of our 1U solution, NxSBC managed to significantly reduce the cost of inference per stream by using SBC with GPUs combined at the network level (NAT). When installing 8 SBCs in 1U, 64 logical cores and 8 GPUs are obtained, which is the best indicator in this form factor.

One of the options for using the solution is to recognize objects obtained from a video stream "on the fly" (object analytics). The input, in this case, is most often streaming data. N streams can be directed to 1 SBC, which are further distributed at the network level between the final executive devices to carry out useful work (inference).

Server composition: 1U RAC case, 8xNUC8BEK by Intel with GPU and an air active cooling system upgraded for the server format, 1 router 1 Gb / s, 8 ports inside, 2 outside (main uplink and backup input), 600W power supply, active air cooling control system.

One server contains 8 general purpose 8th Gen Intel Core i5 processors and 8 Iris Plus 655 GPUs. A single server provides 64 general purpose logical processor cores and integrated GPUs for complex tasks. All devices boot over the network without using HDD. All events in the system are logged and transmitted to the operator via the messenger.

Advantages of the solution:

- High density CPU and GPU for server solutions (32 cores, 64 streams at 8CPU and 8GPU in a 1U body).

- Low cost with high CPU and GPU density per 1U.

- Low power consumption (up to 500W at 100% load).

- Hot standby computing nodes at the network level.

- Hardware decoding of video streams.

- Ability to reserve the power supply.

- Ability to share inference between CPU and GPU.

- Ability to work with different data types simultaneously (INT8/FP16/FP32).

- Availability of a visual system for monitoring the state of computing nodes.

- Возможность соблюдения SLA до 0.9999.

- The presence of a centralized control system for computing nodes.

- High-performance hardware coprocessor for stream decompression and compression.

Examples of using the server in the inference of neural networks:

- face recognition (identification in the ACS, formation of gender and age portraits in retail, search activities, etc.);

- recognition of vehicle brands/models (counting, statistics, additional meta to license plates);

- license plate recognition (in photo / video fixation systems, search activities);

- passenger traffic calculation (load accounting, number of incoming/outgoing passengers).

8xNUC server, rev.2

Updated version of a server, based on 8 Intel NUCs, for neural network inference running Intel OpenVINO. Main difference:

- 2 PSUs with hot-swap

- Hot-swap computing modules

- Front panel display and control of compute nodes

Download product specification for ComBox NUC server

PCIe 64xMyriadX Board

Card for inference of neural networks in data centers based on Intel MyriadX chips. Form factor - PCIe x8, 8 blades with 8 MyriadX chips each. Management and inference is performed under Intel OpenVINO.

Download product specification for ComBox x64 Movidius Blade Board

This solution is a full-size PCIe x4 carrier board with 8 blades, each of which contains 8 MyriadX MA2485 chips. In fact, this is a blade system on the PCIe bus, where from 1 to 8 strips of 8 inference accelerators each can be installed within the carrier board. The result - is an industrial scalable, high-density VPU solution for the Enterprise segment. All blades in the system are displayed as multiple HDDL cards with 8 accelerators each. This allows you to use a variety of both for the inference of one task with many incoming streams, and for many different tasks.

The solution uses PLS for 12 PCIe lines, 8 of which go to 8 blades, one line for each, and 4 for the motherboard. Next, each line goes to a PCIe-USB switch, where 1 port is used for connection, and 8 are forwarded to each connected MyriadX.

The total power consumption of a board with 64 chips does not exceed 100 W, but it is also allowed to connect the required number of blades in series, which proportionally affects power consumption.

In total, within one full-size PCIe board, we have 64 Myriad X, and within the server solution on the platform, for example, from Supermicro 1029GQ-TRT, 4 boards in the 1U form factor, i.e. 256 Myriad X chips for inference in 1U.

If you compare the solution based on Myriad X with nVidia Tesla T4, it is reasonable to consider the ResNet50 topology, in which the VPU gives 35 FPS. In total, we have 35 FPS/Myriad X * 64 pieces = 2240 FPS/Board and 8960 FPS/server 1U, which is comparable to the performance of batch=1 nVidia Tesla V100, while the cost of the accelerator on Myriad X is much less. Not only the cost shows the feasibility of using Myriad X in inference, but also the possibility of parallel inference of various neural networks, as well as efficiency in terms of heat generation and energy consumption.

Back to main page